Artificial intelligence pioneers need to stop obsessing over themselves

Mimicking the human brain will only hinder the pursuit of true artificial intelligence, says Facebook’s director of AI research.

Dan Robitzski • September 18, 2017

While biology should be a source of inspiration, evolution and computer scientists pursue different goals. [Image Credit: Wikimedia Commons / Michael Royon | CC0 1.0]

Some believe that mankind was designed in the image of its creator. And when it comes to true artificial intelligence, something that may be our greatest creation, we’ve tried to do the same. A typical approach for artificial intelligence is to recreate the human brain in digital form. But top scientists say that the inspiration will have to come from elsewhere. In fact, trying to perfectly imitate the human brain would be a waste of time.

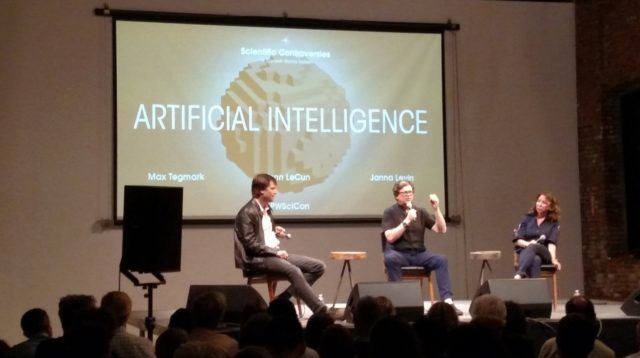

“We don’t really understand the human mind,” says Janna Levin, an astronomer from Barnard College in New York City, while leading a recent panel on the technical and ethical future of artificial intelligence. “We thought we could understand the human mind by mapping it and that didn’t really pan out.”

“How can we generate an artificial mind when we don’t understand the human mind?”

According to the artificial intelligence researchers on the panel, that’s a trick question. We can’t perfectly emulate the brain. Instead, we should be spending our time unlocking the underlying principles of intelligence.

Focusing too much on the brain is simply “carbon chauvinism,” says Max Tegmark, a physicist at the Massachusetts Institute of Technology and the director of the Future of Life Institute. There’s nothing magical about how the brain works, even if its machinations have eluded scientists thus far.

“We’re too obsessed with how our brain works,” says Tegmark, “and I think that shows a lack of imagination.” And history backs him up.

In the Victorian era, an engineer named Clément Ader built the first heavier-than-air flying machines. He modeled them – the Eole and Avion – after bats. The machines were little more than chairs with large bat wings on either side.

Ader sustained several hundred meters of flight in his largely uncontrollable contraption. But if he was the first, why are the Wright brothers famous when Ader is not?

The third iteration of Ader’s Avion. While it could sustain flight, its steam-powered engines rendered it totally uncontrollable. [Image Credit: Wikimedia Commons / PHGCOM | CC BY-SA 3.0]

But even though creating artificial intelligence in our own image isn’t the way to go, the discussion that night on the panel always returned to biology. As Levin put it, human intelligence and consciousness are still our best examples.

“You can get inspiration from biology, but you don’t want to just copy it,” says Yann LeCun, director of AI research at Facebook and the other panelist for the evening. “Retracing evolution will be very difficult from an engineering point of view.”

That’s because evolution lacks agency. There was no conscious effort or decision to create intelligent apes. Rather, we are here due to millions of years of random mutations that happened to let us survive long enough to reproduce, if we so desire. Maximizing or simplifying our brains’ capacity for intelligence and reasoning was never part of the equation.

The human brain is complex and convoluted. It’s filled with mechanisms that allow it to self-assemble in the womb and self-repair throughout life. A machine won’t need any of that because we’re handling the assembly. It will just need to take in data, process it, and learn.

Artificial Intelligence pioneer Yann LeCun speaks at the latest “Science Controversies” Panel at Pioneer Works. Also speaking are renowned physicist Max Tegmark and host Janna Levin, an astronomer at Barnard College. [Image Credit: Dan Robitzski]

LeCun explains that for the more traditional approach of supervised learning, humans have to feed thousands of examples into the system before the machine can do meaningful work on its own. For example, an image recognition algorithm will need to see countless apples before it can identify an apple in a photo without error.
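To make the idea concrete, here is a minimal sketch of supervised learning in Python. It is not how production image recognition works: the two features (redness and roundness), the toy data, and the nearest-centroid classifier are all assumptions chosen for illustration. The point it shows is the one LeCun makes, that a human has to supply every labeled example before the program can classify anything.

```python
from collections import defaultdict


def train_centroids(examples):
    """Average the feature vectors of each label.

    examples: list of (features, label) pairs labeled by a human.
    Returns a dict mapping label -> mean feature vector.
    """
    sums = {}
    counts = defaultdict(int)
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {
        label: [s / counts[label] for s in total]
        for label, total in sums.items()
    }


def predict(centroids, features):
    """Return the label whose average example is closest to `features`."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(centroid, features))

    return min(centroids, key=lambda label: squared_distance(centroids[label]))


# Hypothetical toy data: (redness, roundness) scores with hand-written labels.
LABELED_FRUIT = [
    ((0.9, 0.8), "apple"),
    ((0.8, 0.9), "apple"),
    ((0.7, 0.85), "apple"),
    ((0.2, 0.3), "banana"),
    ((0.1, 0.2), "banana"),
    ((0.15, 0.25), "banana"),
]

centroids = train_centroids(LABELED_FRUIT)
print(predict(centroids, (0.85, 0.75)))
```

With only six examples the classifier is trivially easy to fool, which is why real systems need far more labeled data.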

The second approach is reinforcement learning, in which AI systems or neural nets (algorithms that function similarly to our brains) train each other. This approach generally only works in gaming. A chess-playing AI can play millions of games against itself, learning the nuances of the game, in no time at all.
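A rough sketch of that self-play idea, in Python, on a game far simpler than chess. Everything here is an assumption made for illustration: the game (players alternately take one to three stones from a pile, and whoever takes the last stone wins), the lookup table standing in for a neural net, and the parameter values. The program plays both sides and nudges its estimate of each move toward the outcome of the games in which that move was played.

```python
import random

ACTIONS = (1, 2, 3)  # stones a player may take on one turn


def legal_actions(pile):
    return [a for a in ACTIONS if a <= pile]


def greedy_action(q, pile):
    """Pick the move with the highest learned value (untried moves count as 0)."""
    return max(legal_actions(pile), key=lambda a: q.get((pile, a), 0.0))


def train_self_play(episodes=20000, max_pile=10, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {}       # (pile, action) -> average outcome for the player who moved
    visits = {}  # (pile, action) -> number of times that move was tried
    for _ in range(episodes):
        pile = rng.randint(1, max_pile)
        history = []  # one (pile, action) entry per turn, players alternating
        while pile > 0:
            if rng.random() < epsilon:
                action = rng.choice(legal_actions(pile))  # explore
            else:
                action = greedy_action(q, pile)           # exploit
            history.append((pile, action))
            pile -= action
        # Whoever made the last move took the final stone and wins.
        reward = 1.0
        for key in reversed(history):
            visits[key] = visits.get(key, 0) + 1
            old = q.get(key, 0.0)
            q[key] = old + (reward - old) / visits[key]
            reward = -reward  # the previous move belonged to the opponent
    return q


q_table = train_self_play()
print({pile: greedy_action(q_table, pile) for pile in (1, 2, 3)})
```

For piles of one to three stones the learned table should favor taking every stone, since that wins on the spot. Nothing the table learns carries over to any other game.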

But neither of these methods is perfect. Neither will yield an artificial intelligence that can truly teach itself about the world. Humans still do all the heavy lifting in supervised learning and those chess-playing computers know nothing else.

“We train neural nets in really stupid ways,” says LeCun, “nothing like how humans and animals train themselves.”

Babies learn object permanence by the time they’re around two months old. By the time they’re half a year old, they intuit a great deal about how the physical world works. But we have no way of initiating this same sort of unsupervised learning in our machines.

If anyone had pulled this off, it would likely have been LeCun and his team at Facebook; only large companies have the resources and architecture to train high-level neural nets. But during the panel he shrugged, “We have no idea.”

This is why a biological basis for AI, rather than a perfect reconstruction of our own brains, is crucial. There’s no other model for programmers to look to. The human brain is a scientific marvel, but not the only answer. Researchers need to remember that there is nothing so special about humanity, or the supercomputers hiding in our skulls, that they shouldn’t try to create something new.