From index cards to information overload

The perks and pitfalls of scientific databases

Ashley Taylor • January 31, 2012

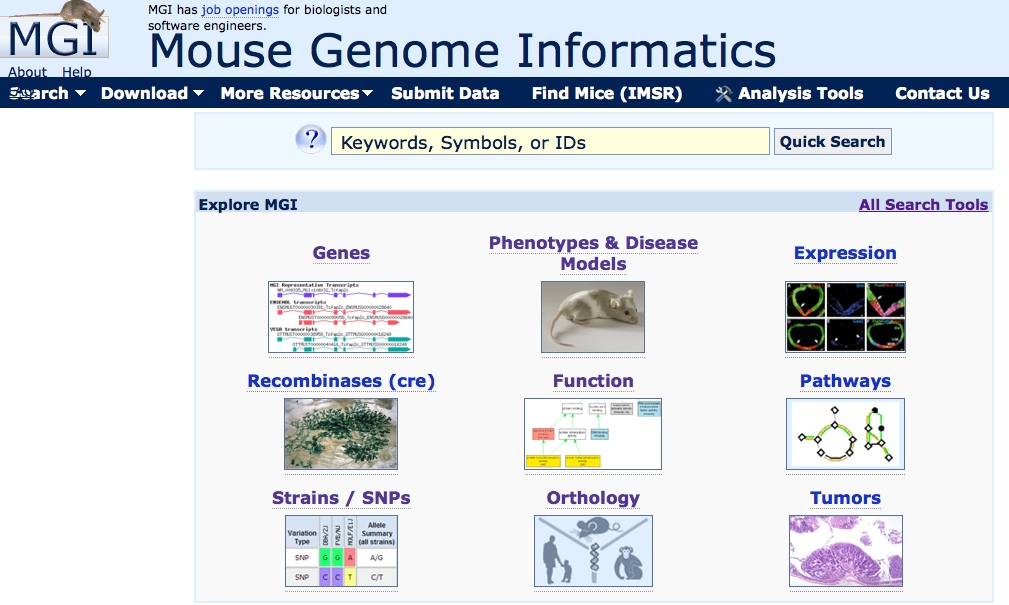

Databases allow researchers to share and access abundant information. [Image Credit: Mouse Genome Informatics]

“And then the lights went on all over the world.”

This is how Tom Roderick, the first researcher to work with a computerized database of mouse genes, remembers the time when scientists started to recognize the similarities between the genes of mice and humans.

“I was a skeptic at first,” he said. “That’s got to be chance.” But once he started entering human genes alongside mouse genes in his database, the data convinced him. “It was so exciting when we started to realize that some genes were linked (inherited together) in the mouse and that the same genes, when we could find the same genes in humans, those were also linked together … We found linkages in human and mouse that were the same, and not only were they the same but they were lined up in the same order.”

In the 1970s, Roderick started what later became Mouse Genome Informatics, the database of the mouse genome, which now catalogs over 37,000 mouse genes. Making genetic data available online allowed analyses like the one Roderick remembered. But scientific databases aren’t limited to genetics; they affect physics, chemistry, geology and just about every other corner of scientific research. For example, the Petrological Database, or PetDB, allows geologists to share data about the sea floor that bear on questions such as how Earth’s crust forms and how undersea volcanoes behave.

“You can make comparisons in a flash that you might not even endeavor to look at because of the amount of labor involved,” said Karin Block, geology professor at City College of New York, recalling how she once typed data from articles in scientific journals into giant spreadsheets. “Now you can just download it and take a look and plot it up right away,” Block said.

As useful as it is to scientists, data sharing raises questions about who receives credit when one scientist uses another’s data and whether scientists can trust data they did not collect themselves. Furthermore, digitized information is accumulating so fast that curated databases and publications, even when disseminated online, cannot keep up. As a result, some researchers are now promoting new wiki-based (user-generated and user-edited) websites for sharing scientific data, such as WikiGenes, which was one of the first of its kind when it was created in 2008.*

When online databases were first developed, they represented a huge change. This was not just the difference between building an Excel spreadsheet by hand and downloading one from a database. The very idea of organizing information on a computer was a novelty. At the Jackson Laboratory, a longstanding mouse genetics institution in Bar Harbor, Maine, scientist Margaret Green used a set of index cards to record data about mouse genes and how they seemed to be related. When Roderick, the man who coined the term “genomics,” inherited the task of maintaining this data set in the early 1970s, he said he realized they needed a way to look at the data without “shuffl[ing] through cards and papers.”

The first incarnation of the mouse genome database was simply the information from these cards entered into the computer, in the same way computerized card catalogs replaced paper ones. Roderick said, “I was so excited about this, putting this into the computer, that I went over to the lab at night to do it, and I signed into the lab so often that … they gave me [ID] card number one.” Now Mouse Genome Informatics has the entire mouse genome sequence, information about the proteins the genes encode, and, as always, information about how mouse genes relate to their human counterparts.

Not only do databases let scientists do their experiments more easily, but they also suggest new experiments to do. Trolling databases for correlations and developing hypotheses about what those correlations might mean has become a common practice in science. Roderick thinks that hypotheses that emerge from databases show one of their great strengths: “Databases give you the opportunity to generate information about correlations that you might not otherwise even think about, and suddenly you find an association through a database that says, ‘hey, there’s something there,’ … and you may then want to set up an experiment to prove it further.”

But databases also raise questions about quality control, say scientists such as James Day, who avoids relying on the Petrological Database for that reason.

Day, a geologist at the Scripps Institution of Oceanography, in La Jolla, California, has used PetDB but prefers to generate his own spreadsheets from scientific papers because he thinks it’s important to look at the paper behind every dataset and be confident about how the data were collected. “I need to know what I’m plotting when I’m plotting data against my own.” He acknowledges that making his own spreadsheets slows down his research, which is focused on “how planets form and transform.” He explained, “If I was a little less careful and wanted to save time, I could just use PetDB. But saving time and doing the right thing are quite different.”

At the other extreme, scientists such as geologist Claude Herzberg at Rutgers University sometimes do analyses relying exclusively on data that others collect and post to databases, a process called data mining.

For example, in a 2004 paper about the behavior of the ocean floor, which Herzberg called a “more comprehensive analysis of what others had done,” Herzberg credits PetDB but is the paper’s sole author, even though he acknowledged that he relied on “thousands and thousands” of data points collected by others.**

What bothers University of Florida geologist Michael Perfit is that researchers sometimes download data and use them without going back to read the papers written by the scientists who originally collected the data. When that happens, the original researchers are less likely to get the credit, via citations, that they deserve for their labors.

This is what Perfit says happened to him. In the 1990s, the early days of PetDB, Perfit and a student were gathering data from an ocean ridge in the Pacific, called the Siqueiros Transform Fault. In 1996, they published a paper in Earth and Planetary Science Letters, which included some, but not all, of the data they collected. Meanwhile, the student was collecting more data and posting them to PetDB. Then, Perfit said, “at least one group of people wrote a paper that was based upon that data before my graduate student could write it up.” They didn’t cite her, just the database, Perfit said.

“It’s very important for young scientists, any scientists, really, to get credit for the publications that they put out,” Perfit said.

Sharing scientific information effectively and giving credit where it’s due become all the more difficult as researchers collect data and publish experiments and analyses faster and faster. Scientists now put out about 2,400 new journal articles every day, and that counts only the articles indexed in PubMed, the main database for life science journals.

“No one can keep up with that,” commented Robert Hoffmann, founder of WikiGenes and a Branco Weiss Society in Science Fellow. WikiGenes applies the Wikipedia model to genetics. Each page represents a gene and includes information about that gene from all organisms — humans, mice and bacteria, for example. Users rate authors to help other users judge the information quality; the author’s name and rating pop up when you move the cursor over the text.

Hoffmann thinks that WikiGenes may end up replacing many scientific journals. “My feeling is that we’ll shift into a much more data-based kind of system where people publish their facts and hypotheses in a more and more machine-readable way.”

The databases that revolutionized science are undergoing a revolution of their own.

*This sentence was corrected due to a factual error, 10:55pm January 31, 2012.

**This sentence was corrected due to a factual error, 10:55pm January 31, 2012.
