Mind over matter

Envisioning a world of thought-controlled computing

Allison T. McCann • January 12, 2012

A recent video depicts three hipster hackers hanging out in a pseudo-startup basement, casually describing how easily they can command Siri, the new intelligent assistant on Apple's iPhone 4S, using only their minds.

At least it appeared that way.

After the “thought-controlled Siri hack” video went viral, the tech community's initial “oohs” and “aahs” turned to anger and frustration when thought-controlled computing experts were forced to explain that the supposed hack was a hoax.

The disappointment stems from the fact that mind-controlled technology has tremendous potential. Researchers in this burgeoning field sketch out a future in which gamers will navigate mind-controlled virtual worlds, children with attention deficit disorders will learn new ways to focus, and the severely injured or disabled will use only their thoughts to communicate or move artificial limbs. Many of those applications already exist in primitive forms, but they are not yet ready to meet people's outsized expectations.

“I think while we, and others, have accomplished important milestones in this direction, we are far away from achieving complex thought-based control, especially using imagined natural language,” said Gerwin Schalk, a brain-computer interface researcher at the New York State Department of Health's Wadsworth Center, in an email responding to the Siri hack.

While the level of thought-controlled computing shown in the hoax video may still be far off, brain-computer interfaces (BCIs) have been in development for decades. People with paralysis are learning to control robotic limbs, computers and motorized wheelchairs. For those who have lost the ability to speak, BCIs offer a way to control computer speakers by imagining certain sounds or words.

Beyond biomedical applications, companies like Toronto-based InteraXon, a leader in thought-controlled computing, envision a world in which we can move furniture, turn down the stereo or turn off the lights without lifting a finger.

Beyond these luxuries, BCIs could simply help us better understand ourselves, says Ariel Garten, chief executive officer of InteraXon. “When you wear a brainwave headset, you can see what's going on inside your own head,” she says. “You can discover more about yourself, and even work to improve yourself.”

Consumer BCIs are harder to design, however, because their method of acquiring brain signals cannot be as invasive or restrictive as that of most biomedical BCIs, for which patients are willing to undergo surgical implantation.

“One of the hindrances in being able to do this is the ability to get at these [brain] signals in a manner that allows people to live their everyday lives normally,” said Todd Coleman, a bioengineering professor at the University of California, San Diego, who teamed up with engineers at the University of Illinois to develop a wearable BCI patch.

The roots of BCIs can be traced back to Hans Berger's research on the electrical activity of the human brain in the early 1900s, including his first electroencephalography (EEG) recording in 1924. Modern BCI technology emerged in the 1970s with research funded by the federal Defense Advanced Research Projects Agency (DARPA), but only in the past 20 years have researchers truly been able to understand and manipulate brain signals.

The three most prominent ways of measuring brain activity for BCIs are EEG, electrocorticography (ECoG) and single-neuron systems, which differ in where the brain signals are recorded.

EEG measures signals from the scalp and is non-invasive, less expensive and safer than ECoG and single-neuron systems, both of which must be surgically placed on or in the brain. Because the latter two systems record directly from the brain, ECoG from small areas of the cortical surface and single-unit systems from individual neurons, their data is more robust and therefore more useful for designing accurate computer algorithms.

Single-unit systems, however, can sometimes cause local neural or vascular damage, so ECoG systems are considered safer for clinical applications.

In 2005, Matthew Nagle, a quadriplegic from the Boston suburbs, received a single-unit interface implant known as BrainGate and successfully demonstrated his ability to open and close a prosthetic hand and control a computer cursor. A project started by John Donoghue, a professor of neuroscience and engineering at Brown University, BrainGate is now beginning its second trial.

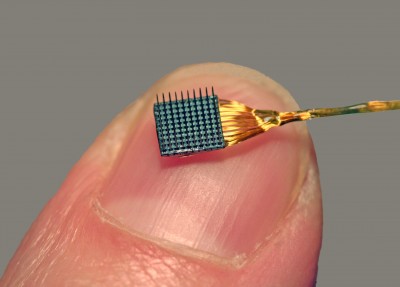

Like the original project, BrainGate2 will implant a tiny internal sensor in a quadriplegic. The sensor will have an array of 100 microelectrodes that will be joined to the neurons responsible for limb movement. The sensor will in turn be connected to external computer processors capable of decoding brain cell activity and turning it into digital instructions. Unlike BrainGate, this trial will also look at the patients’ ability to use communication software in addition to operating assistive devices like prosthetic limbs.

The size of the implanted array for BrainGate2 [Image Credit: Stanford Neural Prosthetics Translational Laboratory]

While BCIs that control motor function are more common, Eric Leuthardt, a neurosurgeon at the Washington University School of Medicine in St. Louis, believes the same methods can be applied to patients who have lost the ability to communicate.

In the most elementary sense of “mind reading,” Leuthardt and his colleagues have already been able to distinguish the brainwave patterns of certain spoken sounds and imagined words, allowing patients to control a computer cursor simply by thinking about saying the right sound.

“We can understand different phonemes such as ‘ooh, ee or ah’,” explains Leuthardt. “And we know the brain ‘signatures’ for them, allowing us to put them in various combinations.”

Others are taking a less invasive approach. Jose Luis Contreras-Vidal, a professor of electrical and computer engineering now at the University of Houston, designed a “brain cap” to measure neural activity. The cap, which resembles a swim cap adorned with holiday lights, connects hundreds of EEG sensors to neural interface software used to control computers, prosthetic limbs and motorized wheelchairs.

“It’s very exciting — we are essentially reverse engineering the brain,” says Contreras-Vidal. “Learning about inputs and outputs and how these things are put together.”

In some instances, what began as a biomedical BCI might find applications beyond the lab. Together with UCSD's Todd Coleman, engineers at the University of Illinois designed a thin, temporary tattoo-like patch of EEG sensors no bigger than a Band-Aid, allowing patients to wear the sensor at their convenience. The researchers also envision the wearable patch being used in areas such as military operations and consumer electronics. Although the patch's signals are not as robust as an ECoG reading, Coleman says it picks up a signal very comparable to that of typical EEG methods.

“Video games, interacting in virtual worlds, or new ways of searching online are all possible with direct acquisition of neural signals,” said Coleman. “Anyone would be able to interact with the world in a ubiquitous, richer manner.”

Others are skipping biomedical applications entirely, opting instead for science-fiction-style entertainment. The Emotiv EPOC Neuroheadset uses EEG and costs around $300. Its associated apps offer everything from thought-controlled video games to EmoLens, an application that lets users index their Flickr photos by emotion.

“There has been a lot of work recently on figuring out what different signals in the brain really mean,” says Erik Anderson, a post-doctoral researcher in the computer science department at the University of Utah who uses the EPOC headset in his research. “We can now show people a series of different images, while recording EEG, to train the system to detect specific emotions.”

Despite this progress in interpreting brain signals, Emotiv-style devices can still detect only crude emotions. A sad scene can be distinguished from a happy one, for example, but not the subtle difference between being agitated and being annoyed.

So while we cannot yet control iPhones with our minds, current developments in BCI technology make the idea seem much less far-fetched. Whether it's moving a prosthetic limb or turning on a microwave, an era of thought-controlled brilliance, or perhaps laziness, is rapidly approaching.

“There is no question it will be part of our future,” says Leuthardt. “In the near term, you’ll see BCIs that help the most severely disabled people. Further into [the] future, you’ll see more consumer utilization of these technologies, and at some point, they’ll be as ubiquitous as cell phones.”