AI-powered ‘patients-on-a-chip’ could hold the future of drug development

With microscopic human tissues and advanced algorithms, testing new drugs in humans could become more efficient

Allison Parshall • February 16, 2022

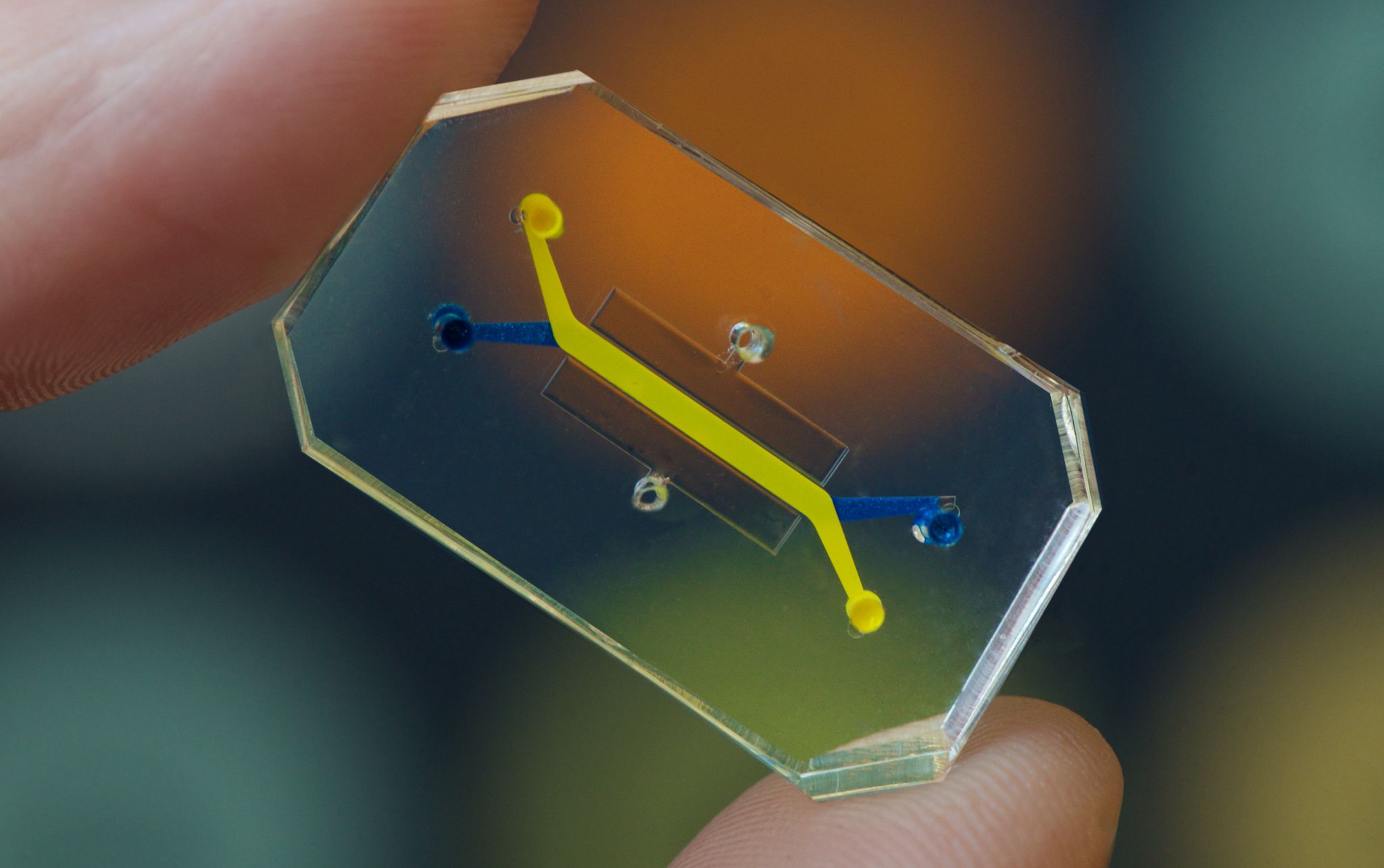

Living human cells placed in chips can be used to test the safety of drugs. [Credit: Harvard Wyss Institute for Biologically Inspired Engineering | Used with permission]

Heart-on-a-chip. Lung-on-a-chip. Brain-on-a-chip. You name the organ, scientists have likely found a way to recreate its tissues inside a small polymer rectangle, thanks to stem cells and some precise engineering.

In 2020, researchers united these so-called “organs-on-chips” into a “patient-on-a-chip” to simulate the human body in miniature. This technology has opened the door to testing drugs on interconnected, living human tissues, which researchers say could help to streamline the costly and inefficient drug development process.

This past October, a Boston- and Tel Aviv-based pharmaceutical company launched a platform that takes patient-on-a-chip drug testing to the next level with the computing power of artificial intelligence, or AI. Its computer algorithms are trained on massive amounts of data from the patient-on-a-chip systems and could predict whether a drug will prove safe and effective in humans. Whether this technology will be adopted broadly by drug companies remains to be seen.

“Arguably, this is the biggest and most lucrative AI challenge of our time,” says Dr. Isaac Bentwich, CEO of Quris, the Israeli and American AI-pharmaceutical company. On its advisory board are Moderna co-founder Robert Langer, as well as Dr. Aaron Ciechanover, who was awarded the 2004 Nobel Prize in Chemistry.

Organ chips do more than just house human tissues grown from stem cells. They actively mimic the environment of the human body with air and blood flowing through tiny, layered channels, explains Dr. Donald Ingber, founding director of Harvard University’s Wyss Institute for Biologically Inspired Engineering. Certain tissues can be expanded and contracted by mechanisms within the chip to simulate the movement of lungs or intestines, Ingber says. Some can house immune cells and can even incorporate a living microbiome — the ecosystem of bacteria that helps you digest food and protects you from disease.

Connect these different organ chips together, allowing fluid to transfer between them, and you have a patient-on-a-chip, also called a microphysiological system.

“The level of clinical mimicry that we’re able to get nowadays is really kind of amazing,” says Harvard’s Ingber. In 2010, his lab pioneered a lung-on-a-chip, and in 2020, institute researchers combined multiple organ systems to form a whole “body” or “patient” on a chip. Because the chips contain living human tissues, experimenting on them can generate findings that are more relevant to humans than research on animals, Ingber says.

Recent advances have eased some of the challenges that previously stood in the way of the technology’s wide-scale adoption. One such challenge had been obtaining the living human cells that go inside the chips, wrote the editors of an organ-on-a-chip special issue of Stem Cell Reports in September. This has gotten easier as stem cells become more available and relatively affordable, allowing scientists to turn an individual’s general, non-specific cells into specific lung or brain cells in the lab, Quris’ Bentwich says.

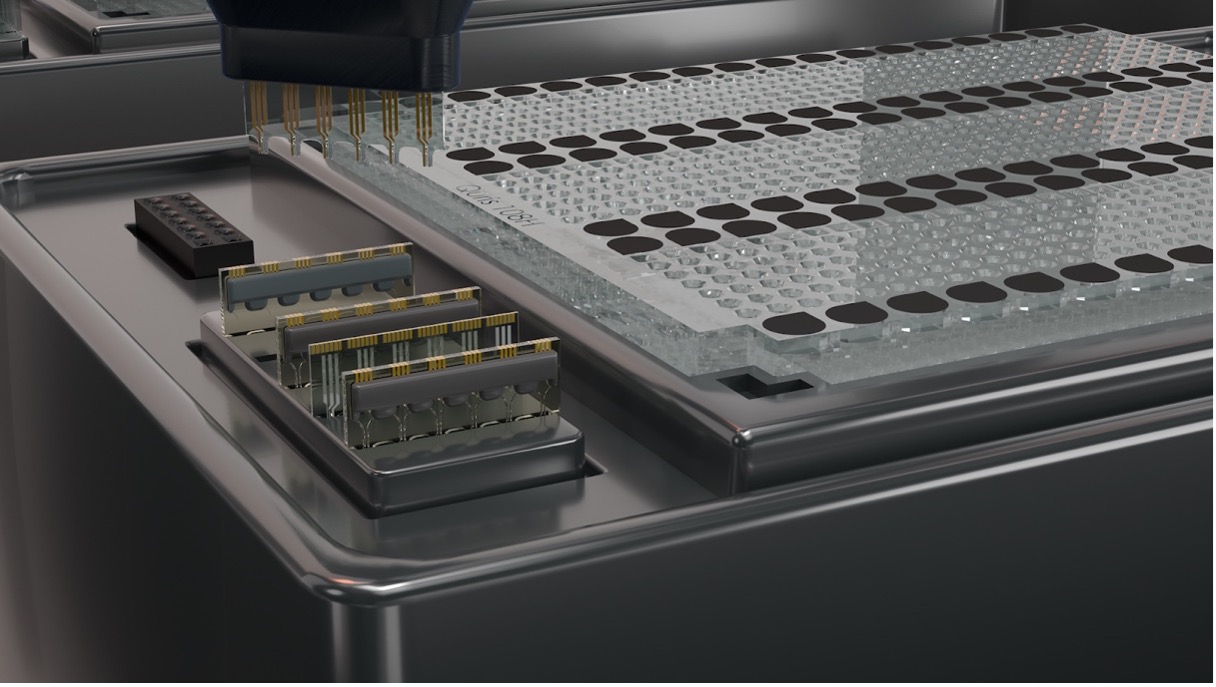

Another challenge has been the design of the chips themselves, Bentwich explains. To apply AI to the chips’ data, Quris’ scientists needed to develop more compact and cost-effective systems capable of running thousands of tests, he says. Artificial intelligence algorithms are only as good as the information that goes into them, so the systems that Quris has developed will need troves of quality data that reveal how different drugs interact with the various tissues in the chips.

Quris’ systems, which Bentwich reports are ten to 100 times smaller than existing chips, fit up to a hundred “patients” on just one chip. This capacity will allow scientists at the company to generate enough data to train their AI algorithm to make predictions about a drug’s safety. Quris is in the process of performing these tests, so they don’t yet have data on the program’s accuracy, Bentwich says.

Ingber also sees the integration of AI into organ chip systems as a boon to more effective drug development. “I think absolutely, that’s where the value lies,” he says. In the last few years, his lab has been moving toward this approach as well, applying advanced computational techniques with individual organ chips, although they haven’t yet extended the approach to entire patients-on-a-chip. “It’s a very, very complex thing to do,” he says.

A computer rendering of Quris’ drug testing setup, which sources say can contain as many as one hundred miniature organ systems on a single chip. [Credit: Quris.ai | Used with permission]

But such a complex tool may be a worthy investment for pharmaceutical companies wanting to predict which of their candidate drugs will succeed in clinical trials. Currently, the vast majority of drugs that enter clinical trials ultimately fail: as many as 89%, according to one analysis cited by Bentwich. This low hit rate costs companies hundreds of millions of dollars and years of research for each failed candidate, he adds. The cost is then passed on to consumers, with high price tags for drugs once they make it to the market.

Any improvement to this low success rate could mean massive savings for drug companies. Whether these savings would be passed on to consumers is unclear. But decreased development costs could potentially open the doors to new treatments for rare diseases as well as new antibiotics. These areas are neglected by most drug companies because massive development costs outweigh their limited marketability, says Bentwich.

Despite the potential for dramatic savings, drug companies are often slow to ditch established drug testing methods for something new. Ingber’s biotech company Emulate, Inc. sells organ chips to 19 of the 25 top pharmaceutical companies, but the new technology has not widely replaced animal tests, he says. “It’s very hard to take a risk on something new when you’re talking about losing $100 million” if that risk doesn’t pay off, Ingber says. The transition away from animal models will come gradually as companies and regulatory agencies gain confidence in these new methods, he adds.

As Adrian Roth, who researches microphysiological systems at the Swiss pharmaceutical company Roche, puts it, “You just don’t want to be the one that is responsible for something going really bad by applying something totally new.” He stresses that, despite being over a decade old, “this is still a new technology. We still need to learn.” The more data that becomes available to directly compare animal and patient-on-a-chip testing, he says, the more companies will feel comfortable making the switch.

For some research questions, animal testing is likely still the best option, Ingber says. Certain elements of our bodies, such as the signaling pathways in our brains, are too complex for current patient-on-a-chip technology — although that could change as the systems grow more sophisticated, Ingber adds.

Other treatments and diseases cannot be tested well in animals, such as cancer immunotherapy, which depends on responses of the human immune system, Roth says. Here, drug developers may be more eager to pick up patient-on-a-chip testing. Faced with a choice between a new, lesser-known tool and “basically no tool at all,” most drug companies will prefer to give the chips a try, says Roth.

Whether or not they are widely adopted, these microscopic systems provide a literal window into the inner workings of human cells and tissues, Ingber says. And as they generate more and more data on the molecular impact of drugs on our bodies, he says, artificial intelligence will play an important role in turning that data into meaningful insights.